Seventy-two-year-old PS Radhakrishnan, a retired Coal India Ltd employee, was asleep in his Kozhikode flat when his mobile phone started buzzing at 5:45 am. The call was from an unknown number, and since he does not answer unknown calls, he did not give it much thought. Unable to go back to sleep, he got out of bed and switched on his mobile data, which is when he noticed several texts from the same number.

“It was early in the morning, and I was not entirely alert,” Radhakrishnan tells Newschecker. The sender introduced himself as an old colleague. Since it was a well-known colleague whom he had known for 40 years, he began responding, and the conversation soon moved on to an audio call.

“He spoke to me in English. The voice was the same as I remembered. My friend is Telugu and even the accent was spot on. I asked him why he was calling me from a new number, to which he said that he had to get a new one after his SIM card had become faulty. He started by asking if I was still in Kozhikode. The conversation continued to my daughter’s whereabouts and then our mutual contacts. At one point he even corrected me when I gave the wrong information about a mutual friend,” Radhakrishnan says.

But there was something odd from the get-go: the calls kept dropping. “Perhaps he was setting the ground for the really short video call,” Radhakrishnan says. Finally, the ‘friend’ got to the point: he needed Radhakrishnan’s financial help because his sister-in-law was in hospital for an operation.

“He asked me to transfer ₹40,000. He gave me a number and a UPI ID to transfer the money, claiming that it belonged to a bystander at the hospital in Mumbai where the operation was going to be performed. I wondered if it was safe in this age of scams to transfer money to an unknown person. He got on a video call in a bid to assure me. I saw him clearly. It was my friend. The lips moved properly while he spoke, the eyes blinked like they normally would. The call dropped in 30 seconds. He said that he was at the airport and waiting to board the flight.”

Radhakrishnan obliged and transferred the money, only to be hit with a request for another ₹30,000. This is when he felt something was off.

“I told him that I wasn’t sure my account had sufficient funds. He asked me to ask around and arrange the money. I hung up, and I called up my friend on the number that I had saved on my phone. It was then that I realised that I had been scammed.”

Scammers posed as victim to further dupe his contacts

Radhakrishnan was not the only one to get such a call. Soon after realising what had happened, he contacted the cyber cell and registered a complaint. “I posted about my experience in various friends and family groups. I realised many others had also received similar calls. Two colleagues in the Ranchi group said that they also spoke to someone claiming to be the same colleague for almost half an hour. But they made some excuses and did not transfer the money. Shockingly, another colleague, who was in Pune, called me up and said that he spoke to ‘me’ for half an hour yesterday and ‘I’ made the same request, on the same pretext of a sick sister-in-law who was due for surgery. It was simultaneous. All of us got the call at almost the same time, in the early morning. But thankfully, none of them lost their money,” he says.

Is there a bigger nexus?

The police team investigating Radhakrishnan’s complaint traced the call to Goa. They found that the money had been transferred to an account in Gujarat and then moved on to four other accounts in Maharashtra. The accounts have since been frozen, but the investigation has revealed more disturbing details: at least eight transactions of ₹40,000 each were made to the same Gujarat account on the day Radhakrishnan was scammed.

So is this an indication of a larger scam nexus? “Not all those transactions can be presumed to be fraudulent. There are several companies that create zero-balance accounts for a variety of purposes which are operated by others, where such transactions keep taking place. We cannot say with finality that all these transactions were benefits of crime,” says Harishankar, SP, Cyber Operations, Kerala Police.

Generative AI scams: a look at the global scenario

American media reported several similar cases earlier this year. Jennifer DeStefano, a resident of Phoenix, Arizona, said she received a ransom call from kidnappers who claimed to be holding her daughter Brianna hostage and demanded USD 1 million. On the line, she heard someone who sounded exactly like her daughter. A 911 call and a frantic effort to reach Brianna revealed that her daughter had not been kidnapped and that DeStefano had been targeted by a virtual kidnapping scam using cloned audio.

In another instance, Ruth Card, a 73-year-old from Regina, Canada, got a frantic call from someone who sounded like her grandson Brandon, asking for bail money after he had supposedly been arrested. Only when a banker pulled Ruth and her husband into his office and told them that another customer had received an eerily similar call, which turned out to be a scam, did the couple realise that they had almost been conned.

Another 73-year-old, a Montreal resident, received a similar scam call from her ‘grandson’. The caller convincingly spoke in a mix of English and Italian, just as her grandson would have. It is believed that the caller used AI to mimic her grandson’s voice.

Beyond the headlines

While such scams had been reported in other parts of the world, this model of scamming, in which generative AI was used to produce both video and audio, was relatively new for the Kerala Cyber Cell. So new, in fact, that the entire staff of the Kozhikode cyber cell came out to meet Radhakrishnan when he went to register his complaint, to ascertain whether he had really seen this ‘friend’ on the scam call.

Media organisations covered the scam and the subsequent investigation with almost alarmist glee. Given AI’s status as the trending topic of the year (“artificial intelligence” was chosen as the 2022 word of the year by FundéuRAE, the Foundation for Urgent Spanish, which is backed by the EFE news agency and the Royal Spanish Academy), a crime committed using AI seems to have been an irresistible story, guaranteed to attract readers.

But according to Harishankar, SP, Cyber Operations, Kerala Police, the investigation suggests that this particular case did not involve very sophisticated AI tools. “They seemed to have used a free/low paid software,” he says, adding, “There are a wide variety of deepfake tools available online. Using a thirty-minute recording of a person, an AI tool can analyse movements and expressions of each syllable and use it to create an AI video. Longer footage spanning 5 hours can help us make an even more convincing deepfake. Ten-hour-long footage would be even better. These require a lot of computational power and expensive technology. But the whole affair is very resource-intensive. High-end tools are not free. Developing 3D deepfakes requires large computation and it is not easy. The free tools usually work on a 2D image, where they use an image to make a 2D deepfake as opposed to a 3D. The current case appears to be one such instance.”

On how the scammers could have mimicked the voice, he adds, “The voice modulation could have been done with a regular audio editing tool, by making minor alterations to the frequency or some other aspects.”

But while the AI tool may not have been very sophisticated, it was social engineering that helped the scammers convincingly dupe Radhakrishnan. Social engineering is a manipulation technique in which scamsters piece together details about an individual from publicly available information online and then use those details to manipulate the person into revealing specific information or taking a specific action.

How likely are you to be the target?

Should you be worried that you may soon get a call from scamsters posing as someone you know? Yes and no; the answer is complicated.

Because AI scams combine deepfakes, voice cloning and social engineering, they are resource-intensive, which makes them difficult to ‘automate’. “While there is scope for such a scam to strike a large population in the future, it is not possible in today’s scenario. It is one thing to scam one individual or a small group of people, but for anything to attain a ‘mass scam’ level, it has to become automatable,” says cyber security expert Hitesh Dharamdasani.

AI scams lack a mass delivery system, so for now they do not scale as easily as earlier scams that relied on SMS, email phishing links or cloned social media profiles. As a result, they may be less widespread, but it is essential to stay vigilant: the technology continues to evolve, and new methods of exploitation may emerge.

Echoing Dharamdasani’s views, Harishankar says, “The scam has not picked up big time (sic) in India because of the technological limitations. AI tool efficiency depends on the volume of data that the system is trained on. These require a lot of computational power and expensive technology. Moreover, there is the vernacular aspect that these technologies cannot overcome. Major tech companies can probably develop such deepfakes in convincing scale but at the moment, the current AI scams that are being reported are too basic and naive, but the problem is that our people are even more naive.”

How to be safe?

That said, it is always wise to take precautions so that you are not hoodwinked by scammers under any circumstances.

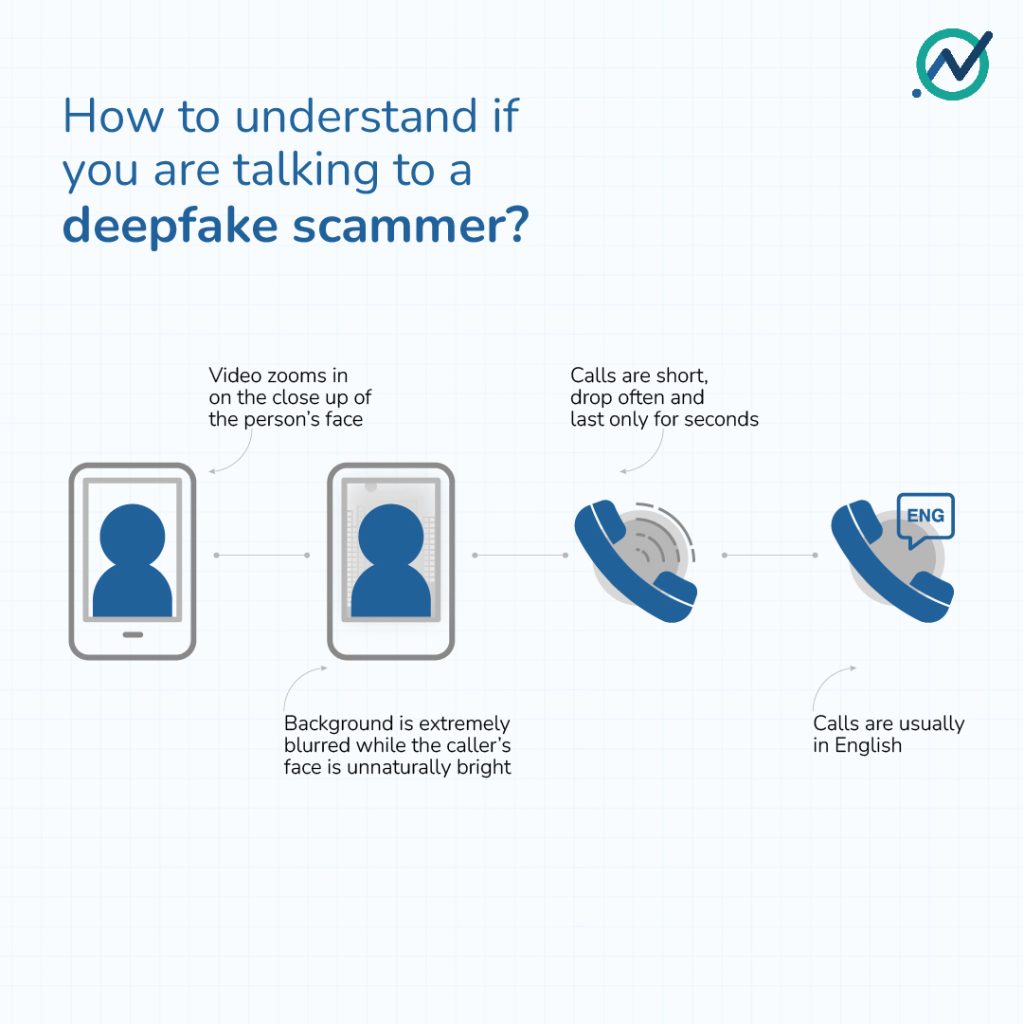

In hindsight, Radhakrishnan says, there were several things off about the call. “The video call, though convincing, only showed a very zoomed-in close-up of my friend’s face. Nothing but his face was visible on the screen. The face was also very bright, unusually bright, unlike regular video calls,” he says. “If I were to do things differently, I would have hung up and called him up on the number that I have,” he adds.

Telltale signs

Two other red flags are the very short duration of the calls and the language used, points out Harishankar. “Deepfake technology is still not advanced enough to mimic the vernacular languages and dialects in India. These technologies usually work in English. So most people who are targeted are usually upper-middle-class, retired folks who use English.”

While one can watch out for such telltale signs, the cyber security experts Newschecker spoke to agree that the only way to stay safe is to treat the intent of any such caller with suspicion.

“If we look at this case, the victim called his contact after transferring the money. He was unsuspecting initially. The only way to be safe is by looking at such calls from an angle of suspicion from the start,” says Shankar V, Head of Cyber Security, Hexaware Technologies.

“Only when the user discerns whether what he is seeing or hearing is real or not, can one be safe. Even being suspicious won’t help, you have to assume that you are being told a lie and prove to yourself that it is not a lie. Start with the assumption that you are being scammed until you verify that it is otherwise. This way you will filter out most things from the get-go,” says Dharamdasani.

What are the legal remedies?

India currently has no specific law addressing the growing concerns around AI, so AI-related matters have to be dealt with under the Information Technology Act, 2000.

With AI-backed crimes slowly finding their way into the headlines, the time is right for the government to step in and legislate to make AI safer and more accountable, feels AI law expert and Supreme Court advocate Pavan Duggal.

“The IT Act is 23 years old. AI had not come into the central lives of people when it was being framed. The act is not equipped to deal with the problems brought in by generative AI. So the sooner we bring in legislation, the better,” Duggal says.

In a written reply to the Lok Sabha in April 2023, Electronics and IT Minister Ashwini Vaishnaw had said that the government was not considering any law to regulate the growth of AI in India. But the Minister of State for Electronics and Information Technology, speaking at an event marking nine years of the Modi government, said that the government would in fact regulate AI to protect ‘digital nagriks’ (digital citizens) from harm.

How is the rest of the world dealing with AI’s potential threats?

The minister’s comments came even as Sam Altman of OpenAI called for regulation of companies operating in the AI sphere. The European Union (EU) is leading the way with the AI Act, which is expected to shape how AI is ‘built, deployed and regulated for years to come’. Beijing is also working on draft regulations on AI development that are expected to be finalised by the end of July. Brazil’s Senate is examining recommendations on regulating AI, and South Korea is reportedly working on a regulatory framework of its own.

But why is legislation important for tackling cyber crimes associated with AI? Many argue that building tools as powerful as AI without adequate safeguards opens the door to cyber crime on a larger scale.

In India, since AI itself is not a legal entity, the companies that make such technology can, under existing regulations, also be held liable and booked for financial fraud. This further complicates the debate around AI and raises the question: who is responsible for the way AI is used? “Law should define the various rights and duties of the stakeholders of the AI sphere, and provide remedy for victims of such crimes,” Duggal says.

“With the pandemic, we are now looking at the golden age for cyber crimes. Cyber crimes with artificial intelligence as the modus operandi are becoming the preferred model for scammers, who are making creative use of AI for financial fraud. We will have to watch out,” he warns.

Like what you read? Let us know! Drop a mail to checkthis@newschecker.in if you would like us to do a deep dive on any scam that you think needs attention. If you would like us to fact-check a claim, give feedback or lodge a complaint, WhatsApp us at 9999499044 or email us at checkthis@newschecker.in. You can also visit the Contact Us page and fill out the form.